Author: emil

Description:

includes/RawPage.php (line 16):

```php
$smaxage = $this->mRequest->getIntOrNull( 'smaxage', $wgSquidMaxage );
```

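This call does not match the definition of getIntOrNull() in includes/WebRequest.php, which takes only one parameter. PHP does not complain about the extra argument to a user-defined function; it simply ignores it, so $wgSquidMaxage is never used as a fallback.

As a result, if the web request has no 'smaxage' parameter, the $smaxage variable is null.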
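To illustrate why the second argument has no effect, here is a minimal stand-alone sketch. The `FakeRequest` class is a hypothetical, simplified stand-in, not MediaWiki's actual WebRequest, and the value assigned to $wgSquidMaxage is only an example. The point is that PHP accepts and silently drops an extra argument passed to a one-parameter user-defined method.

```php
<?php
// Hypothetical, simplified stand-in for WebRequest; not MediaWiki code.
class FakeRequest {
    private $data;

    public function __construct( array $data ) {
        $this->data = $data;
    }

    // One-parameter signature, as described in the report:
    // returns the value as an int, or null when it is absent or non-numeric.
    public function getIntOrNull( $name ) {
        $val = isset( $this->data[$name] ) ? $this->data[$name] : null;
        return is_numeric( $val ) ? intval( $val ) : null;
    }
}

$wgSquidMaxage = 18000; // example value for illustration only
$request = new FakeRequest( array() ); // no 'smaxage' in the request

// PHP accepts the extra argument without error and simply ignores it.
$smaxage = $request->getIntOrNull( 'smaxage', $wgSquidMaxage );
var_dump( $smaxage ); // NULL
```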

Later in the code, when the raw page is not generated (the 'gen' request parameter is not set), $smaxage ends up as 0, which eventually causes a "Cache-Control: private" header to be sent.

Is this the intended behavior? Wouldn't getInt( 'smaxage', $wgSquidMaxage ) be a better fit here?
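A minimal sketch of the suggested alternative, again using a hypothetical, simplified `FakeRequest` class rather than MediaWiki's real WebRequest, and assuming getInt() takes a default value as its second parameter, as the suggestion implies. When the parameter is absent, the configured maximum age is used; when it is present, the request value wins.

```php
<?php
// Hypothetical, simplified stand-in for WebRequest; not MediaWiki code.
class FakeRequest {
    private $data;

    public function __construct( array $data ) {
        $this->data = $data;
    }

    // Returns the raw value, or $default when the parameter is absent.
    public function getVal( $name, $default = null ) {
        return isset( $this->data[$name] ) ? $this->data[$name] : $default;
    }

    // Integer accessor that honours a caller-supplied default.
    public function getInt( $name, $default = 0 ) {
        return intval( $this->getVal( $name, $default ) );
    }
}

$wgSquidMaxage = 18000; // example value for illustration only

// Parameter absent: falls back to $wgSquidMaxage.
$missing = new FakeRequest( array() );
$smaxage = $missing->getInt( 'smaxage', $wgSquidMaxage );
var_dump( $smaxage ); // int(18000)

// Parameter present: the request value is used.
$given = new FakeRequest( array( 'smaxage' => '600' ) );
var_dump( $given->getInt( 'smaxage', $wgSquidMaxage ) ); // int(600)
```

One trade-off to keep in mind: with this approach a non-numeric value such as `smaxage=abc` becomes 0 through intval(), whereas getIntOrNull() would return null for it. Whether the real getInt() behaves exactly like this sketch should be checked against includes/WebRequest.php.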

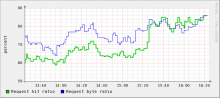

Attached are a patch and the result of applying it on a wiki where I tested it.

Version: 1.12.x

Severity: trivial