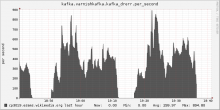

Between 2014-09-28T18:31:10 and 2014-09-28T20:06:34, all esams bits caches saw both duplicate and missing lines.

Judging from the Ganglia graphs, it seems we'll see the same issue for today (2014-09-29) as well.

While the issue was ongoing today, there was a discussion about it on IRC [1].

It is not clear what happened.

The working theory is that, due to recent config changes around varnishkafka, esams bits traffic can no longer be handled with 3 brokers (we're currently using only 3 out of 4 brokers).

[1] Starting at 19:04:03 at http://bots.wmflabs.org/~wm-bot/logs/%23wikimedia-analytics/20140929.txt

Version: unspecified

Severity: normal

See Also:

https://bugzilla.wikimedia.org/show_bug.cgi?id=71882

https://bugzilla.wikimedia.org/show_bug.cgi?id=71881